About Me

AI and engineering leadership in healthtech and fintech, nonprofit founder, former researcher at Harvard.

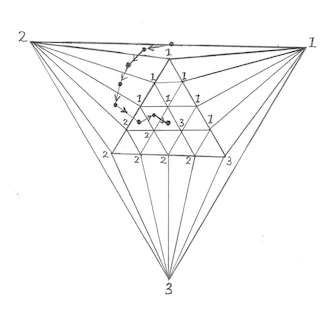

I'm Aron Szanto. I'm an AI researcher, engineer, and Recovering Math Person. Currently I serve as Head of Technology at Redesign Health, where we're on a mission to transform healthcare in service of patients through technology innovation around the world. Previously, I headed the Machine Learning Products department at PathAI, delivering AI-powered diagnostics and therapies for cancer and other diseases. Before that, I led NLP at Kensho, where we built a pioneering portfolio of language model-backed technologies used by experts around the world. I've spent my career in the healthtech and fintech industries, aiming to create AI systems that empower people performing the most critical jobs on the planet, from the CIA to the operating room. Back before Language Models got Large, I studied statistical machine learning, computational economics, and mathematical philosophy at Harvard.

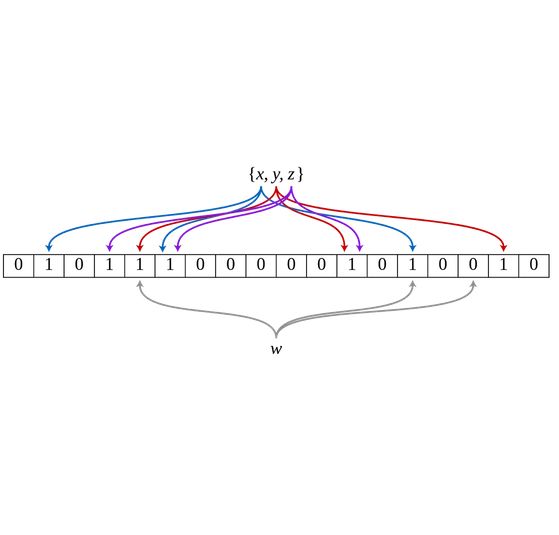

I'm passionate about growing world-class organizations that create outstanding and impactful technology. My work has focused on large language models and multimodal ML, complex disease diagnostics, fake news and misinformation detection, and human-computer hybrid systems. Some of my recent published research examines how fake and real news spread differently, and uses those differences to build models that can identify fake news before it diffuses widely.

When I'm not working, you'll find me playing cello in my orchestra, on the ultimate frisbee field, or hacking on an open source project. My music recordings and tech-stracurriculars are below! Other things you should know about me: my first name is properly spelled Áron and pronounced /ɑ'rõn/ (AH-rown), since my multicultural parents needed to be very authentic. I love espresso, dogs, and cooking. I'm terrible at singing, but I'm a great whistler. And though many have tried, you'll never be able to convince me that there's a city better than New York.